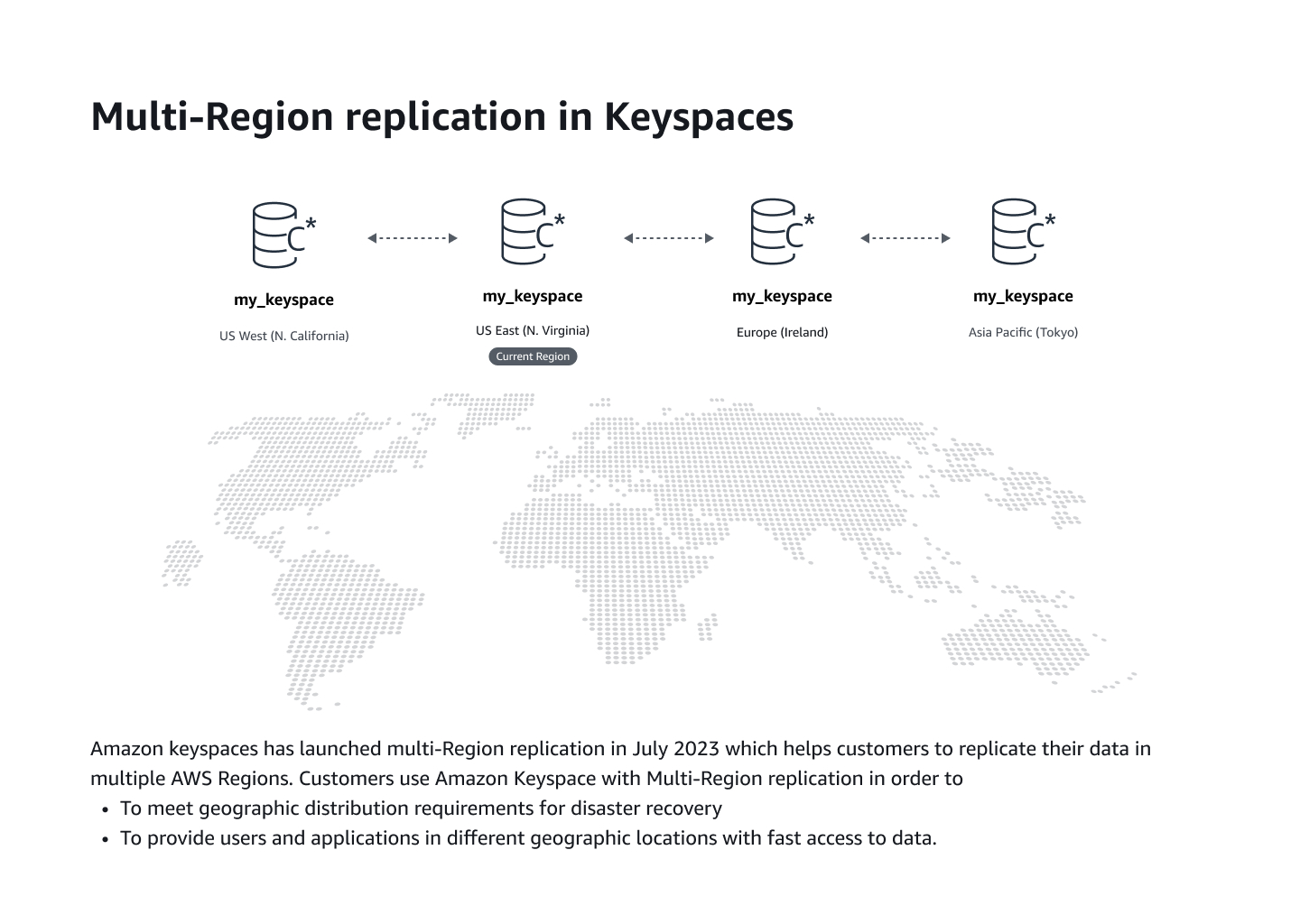

Amazon Keyspaces Multi-Region Replication

Summary

Led the design of Multi-Region Replication for Amazon Keyspaces, enabling customers to replicate their Apache Cassandra workloads across multiple AWS Regions. The project was delivered in two phases: an initial launch of multi-Region capabilities, followed by management features for existing keyspaces.

Problem

Customers running large Cassandra deployments faced significant operational overhead:

- Manual management of cross-region replication and data synchronization

- Complex repair operations to maintain data consistency

- No native way to replicate data in Amazon Keyspaces across regions

- Limited disaster recovery and geographic distribution capabilities

This particularly affected enterprises such as Netflix and Capital One, which run thousands of Cassandra nodes across multiple data centers.

Target Customers

- Enterprise developers managing large-scale Cassandra deployments (hundreds to thousands of nodes)

- Organizations requiring geographic data distribution

- Companies with strict disaster recovery requirements

- Existing Cassandra users looking to migrate to managed services

Research showed that 50% of Cassandra users require multi-region replication capabilities.

Success Metrics

Adoption

- Percentage of new keyspaces created with multi-region replication

- Adoption rate among existing vs new customers

Task Completion

- Success rate of keyspace creation with multi-region setup

- Success rate of table creation in multi-region keyspaces

- Success rate of region addition/removal operations (Phase 2)

Usability

- Time to complete multi-region keyspace creation

- Time to complete table creation in multi-region setup

- Customer support ticket volume related to replication

- Drop-off rates in creation flows

Solution

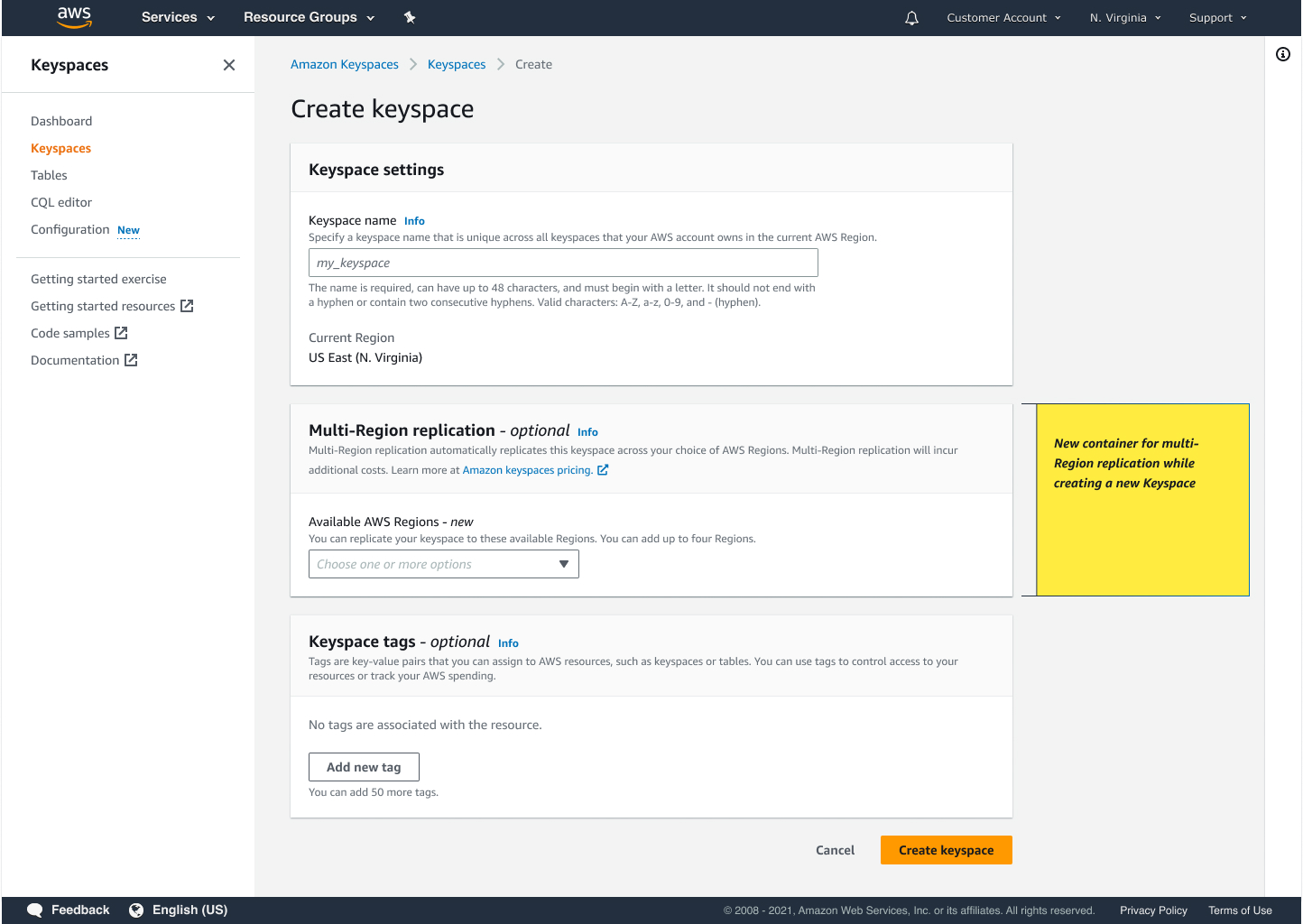

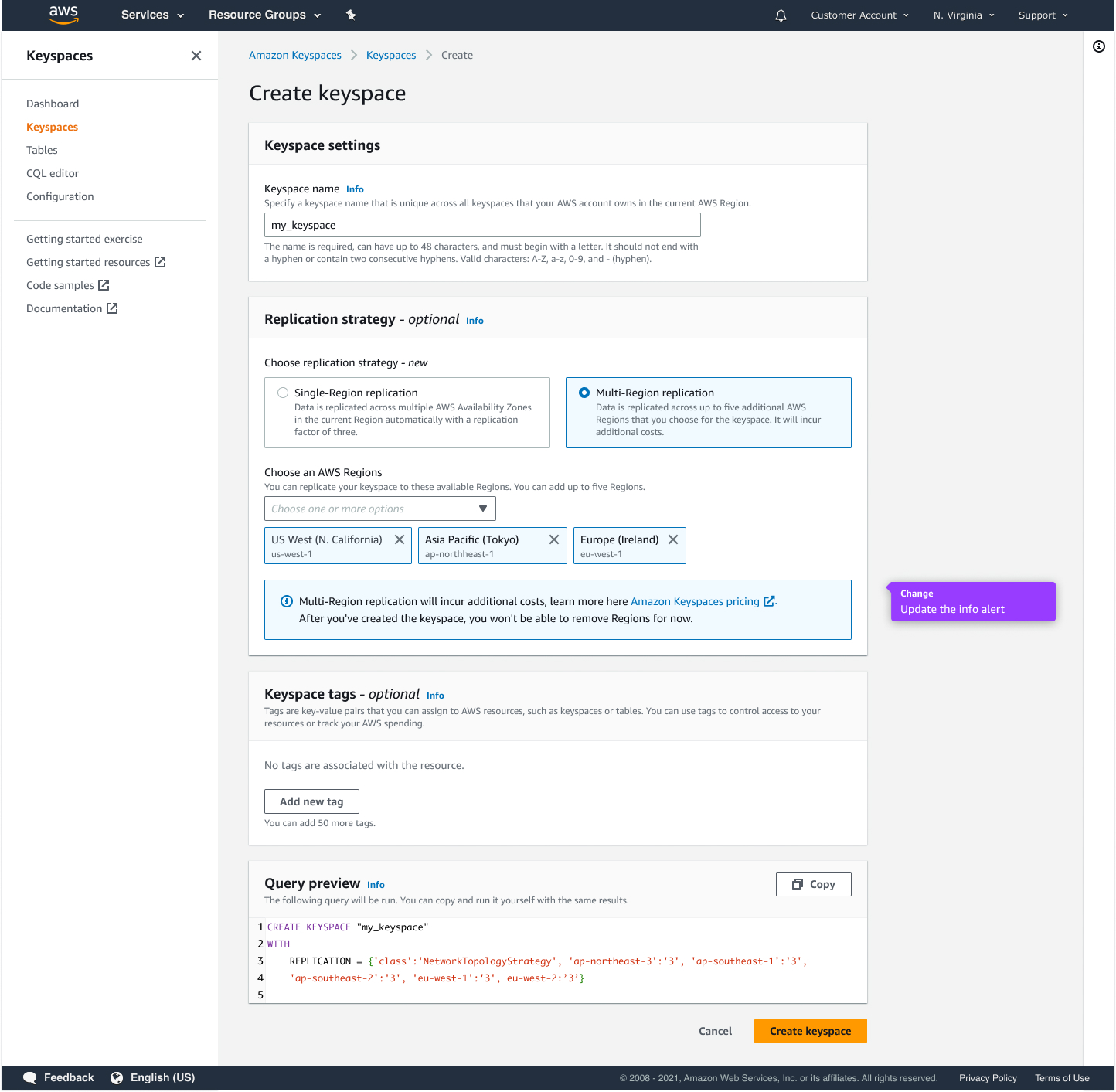

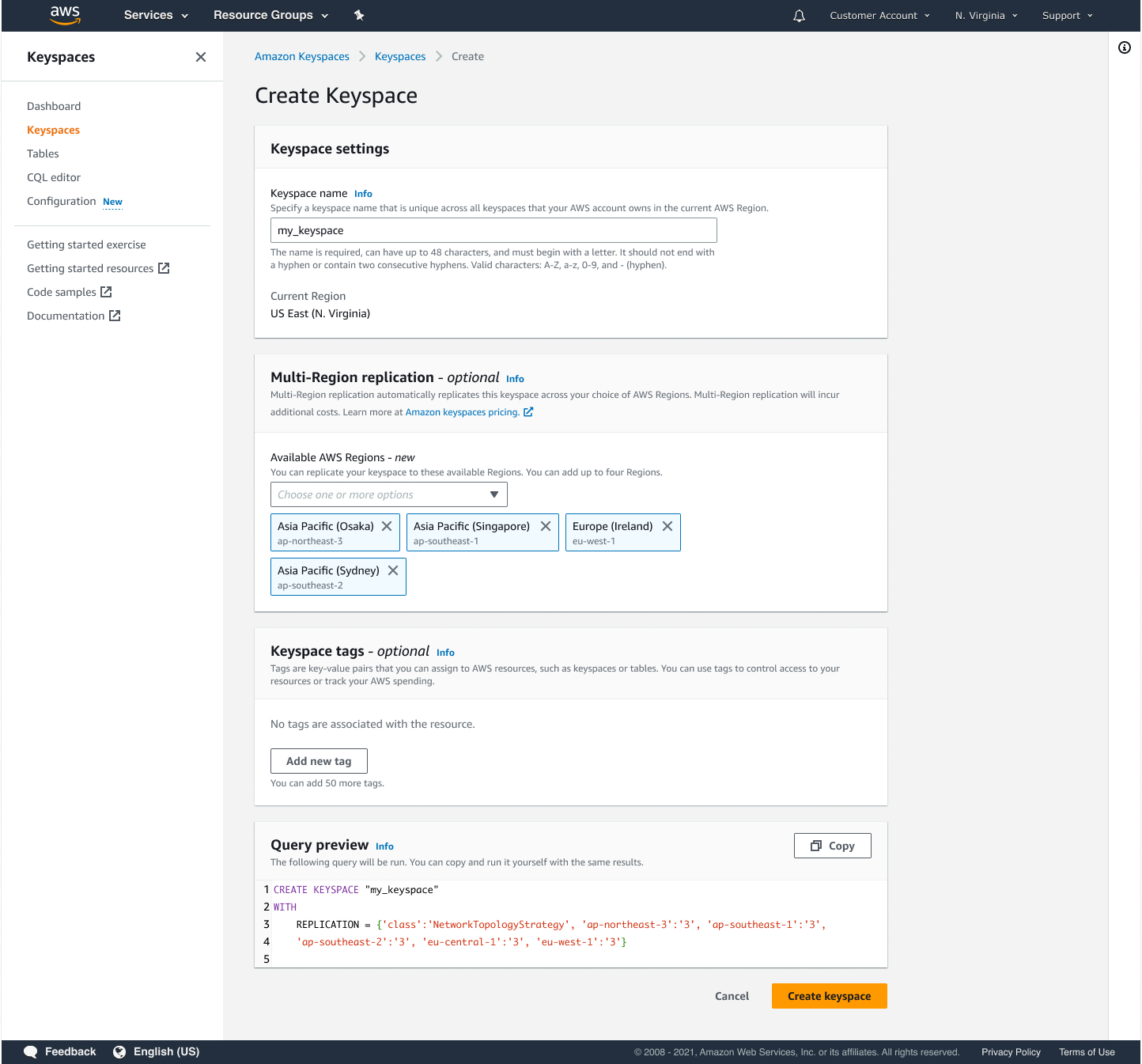

Phase 1: Initial Launch

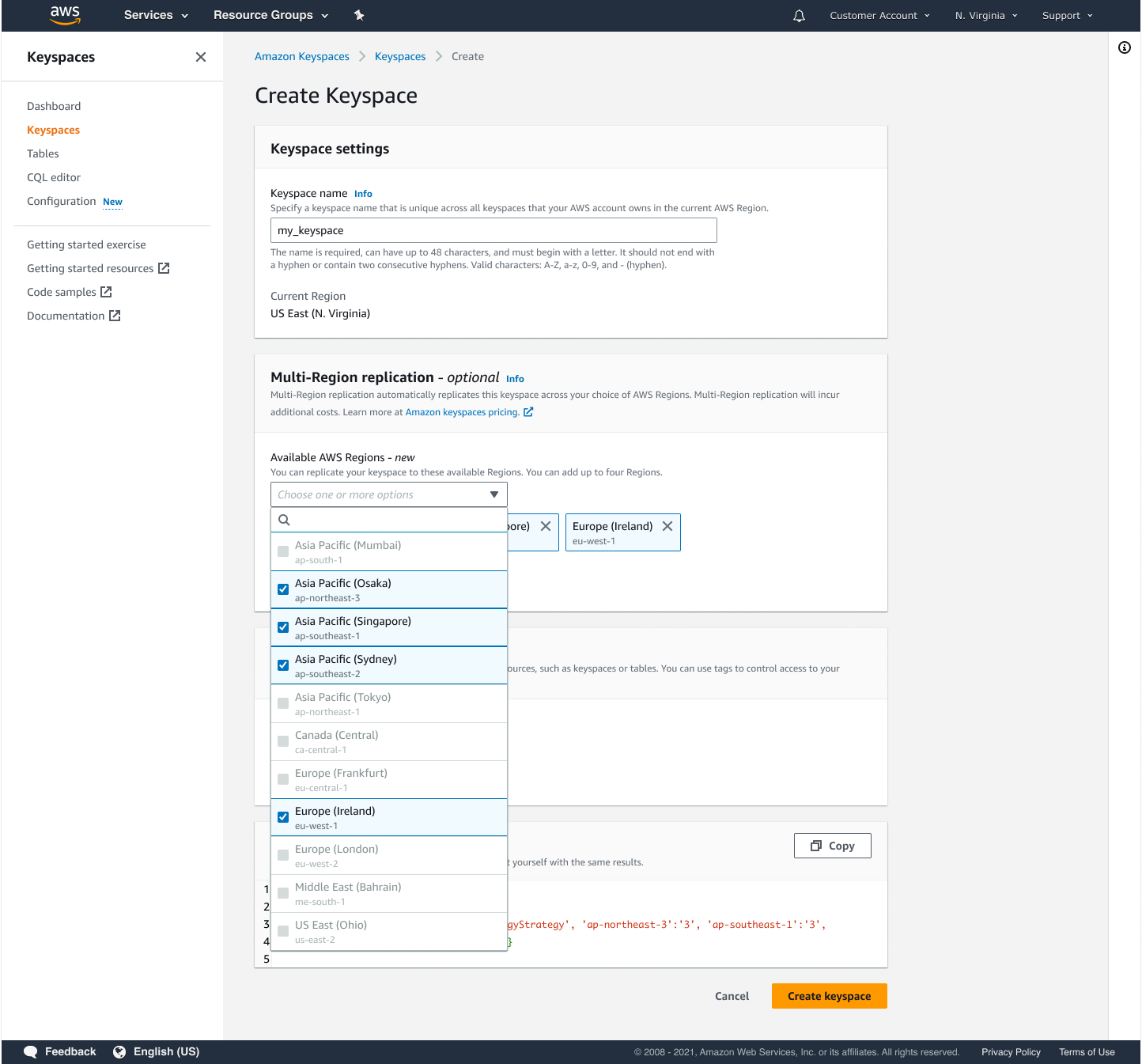

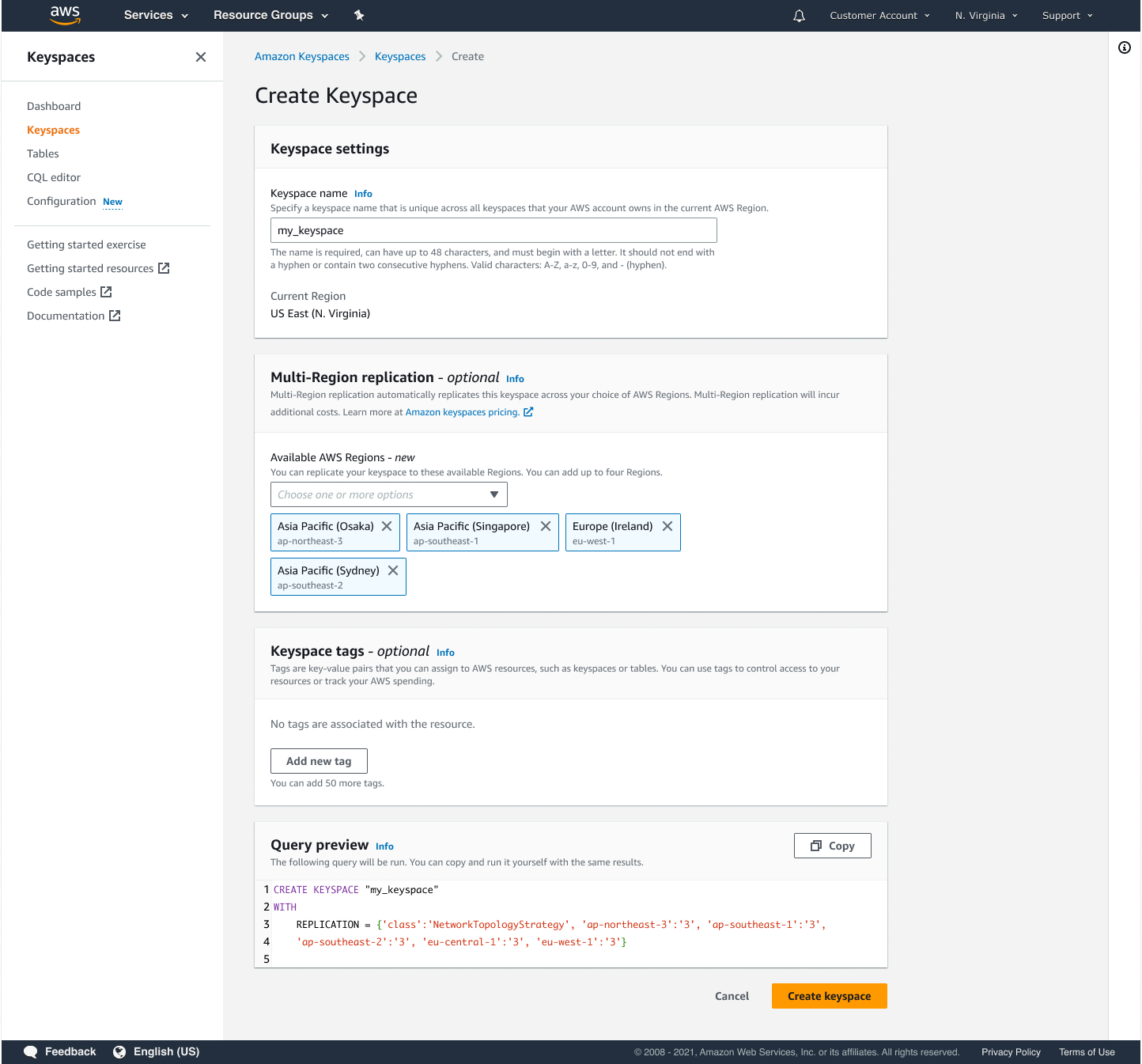

- Multi-region keyspace creation supporting up to 5 AWS Regions

- Automated cross-region replication setup, with tables inheriting the keyspace's replication strategy

- Comprehensive monitoring with replication latency metrics and status tracking

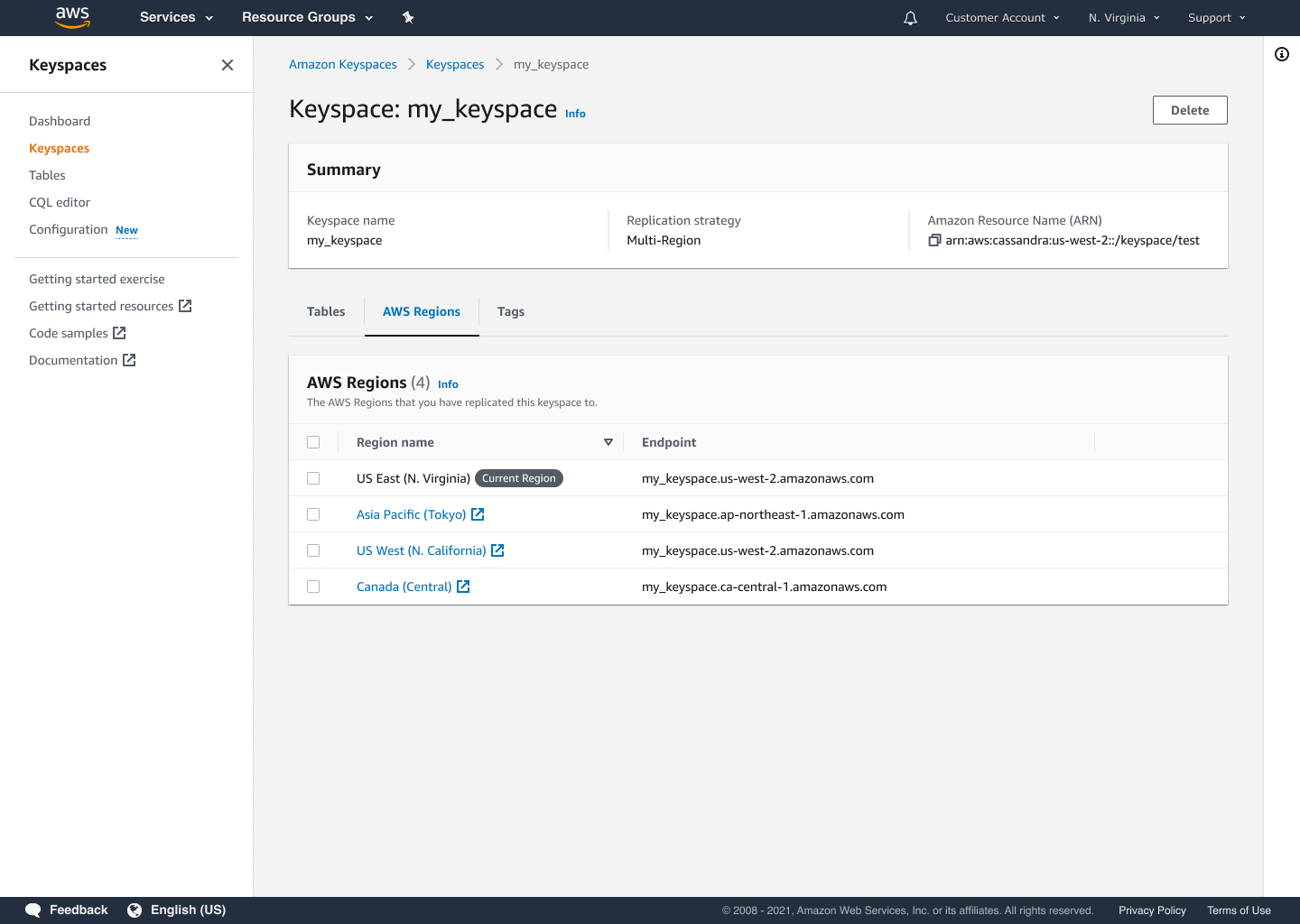

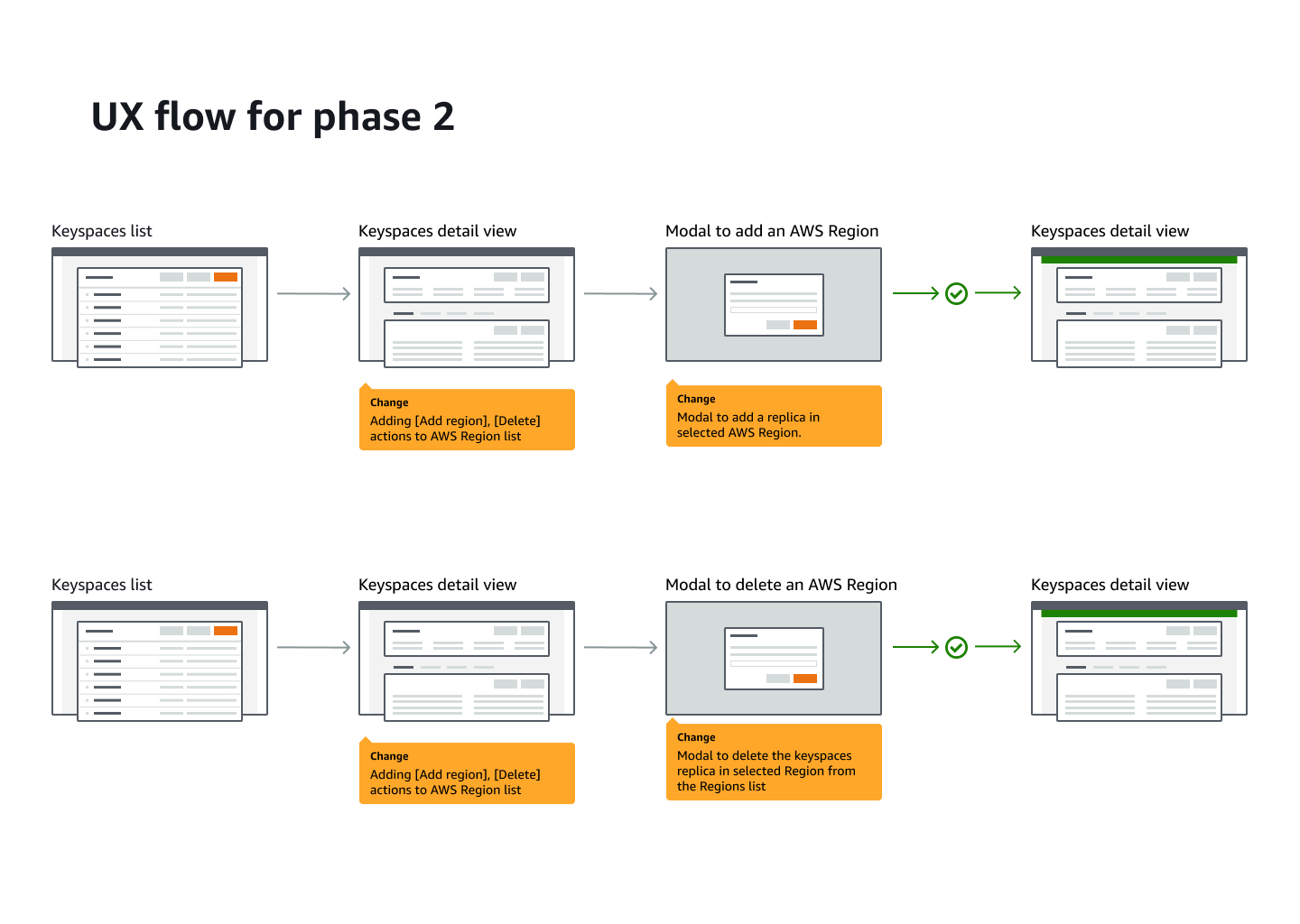

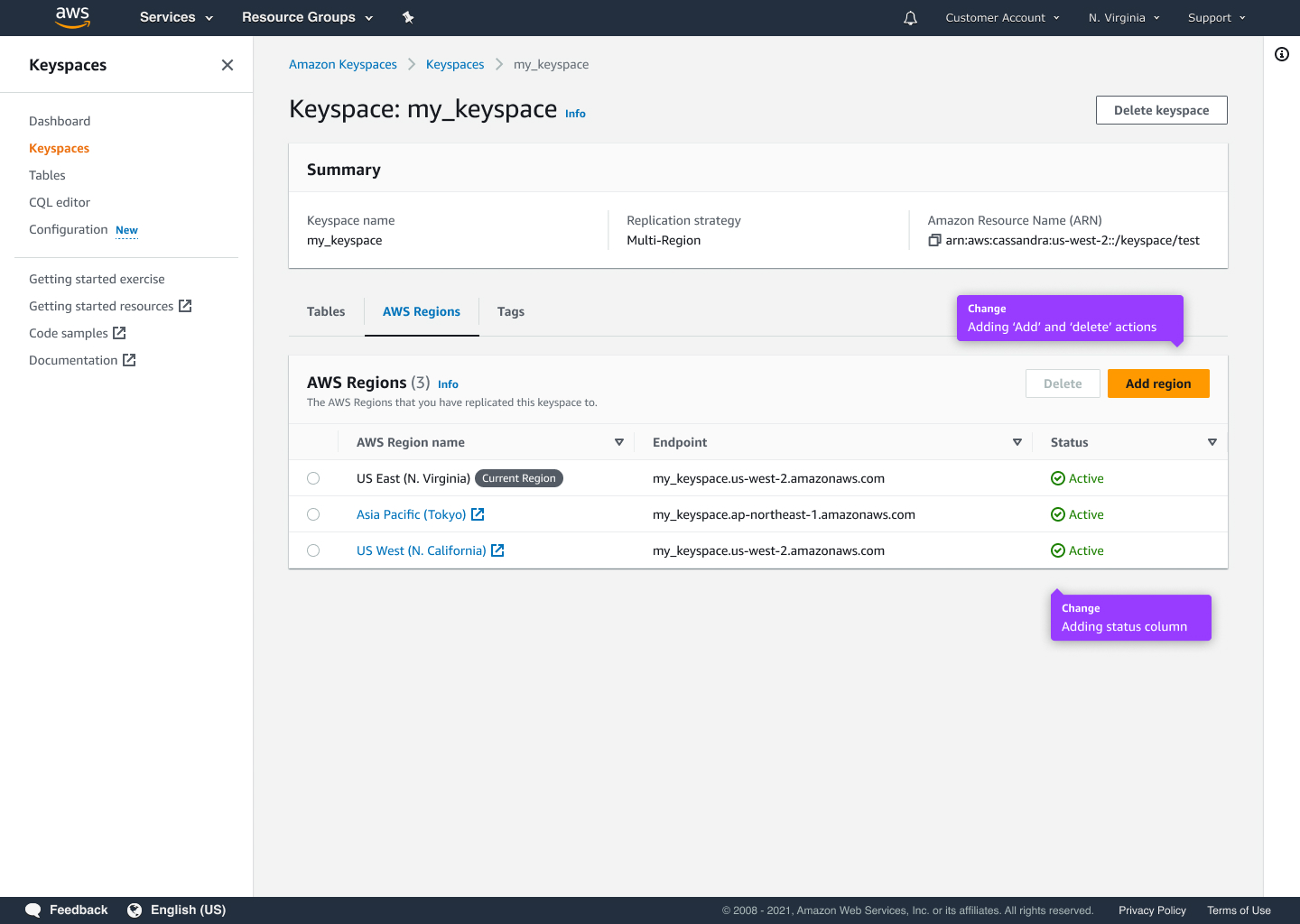

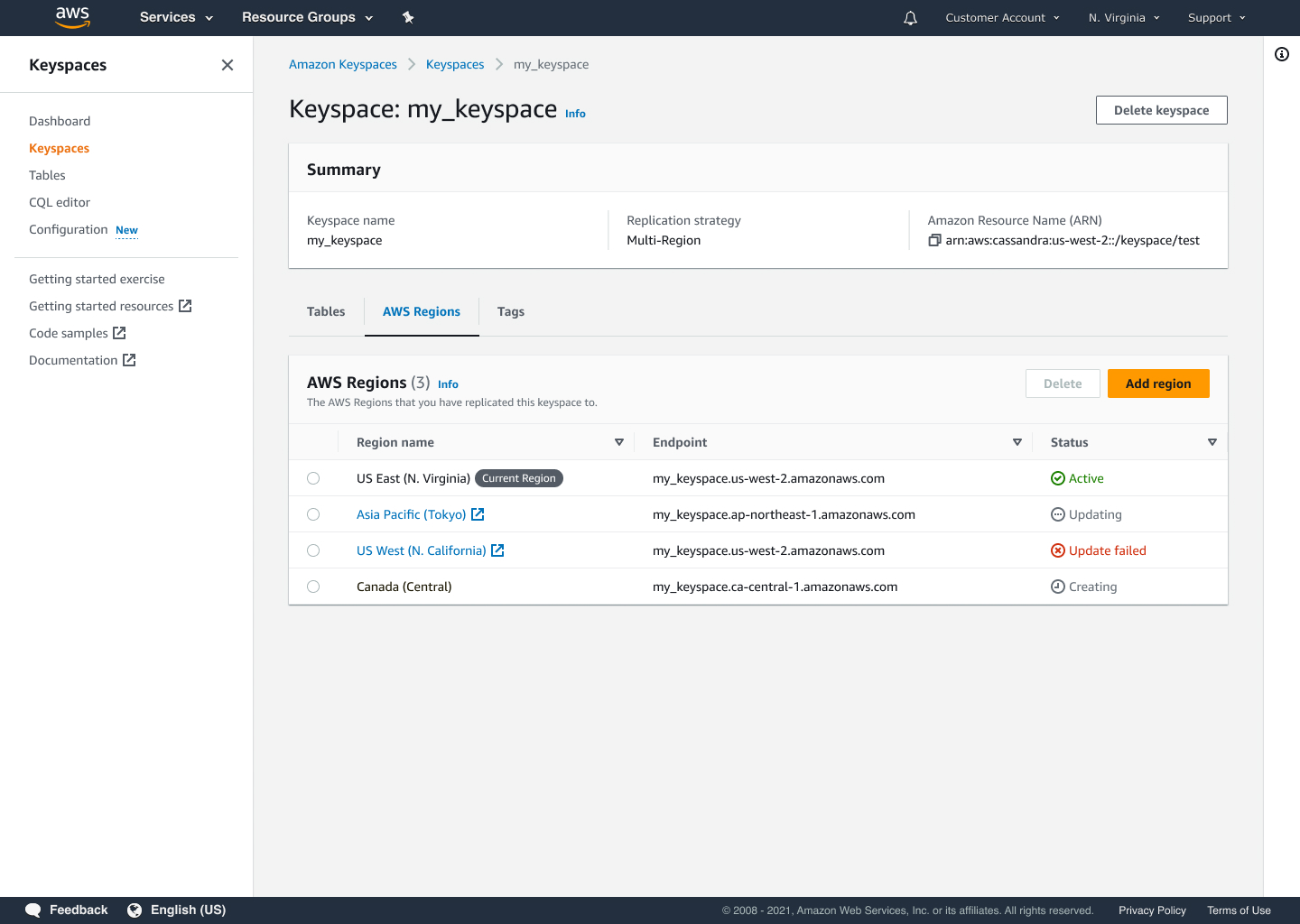

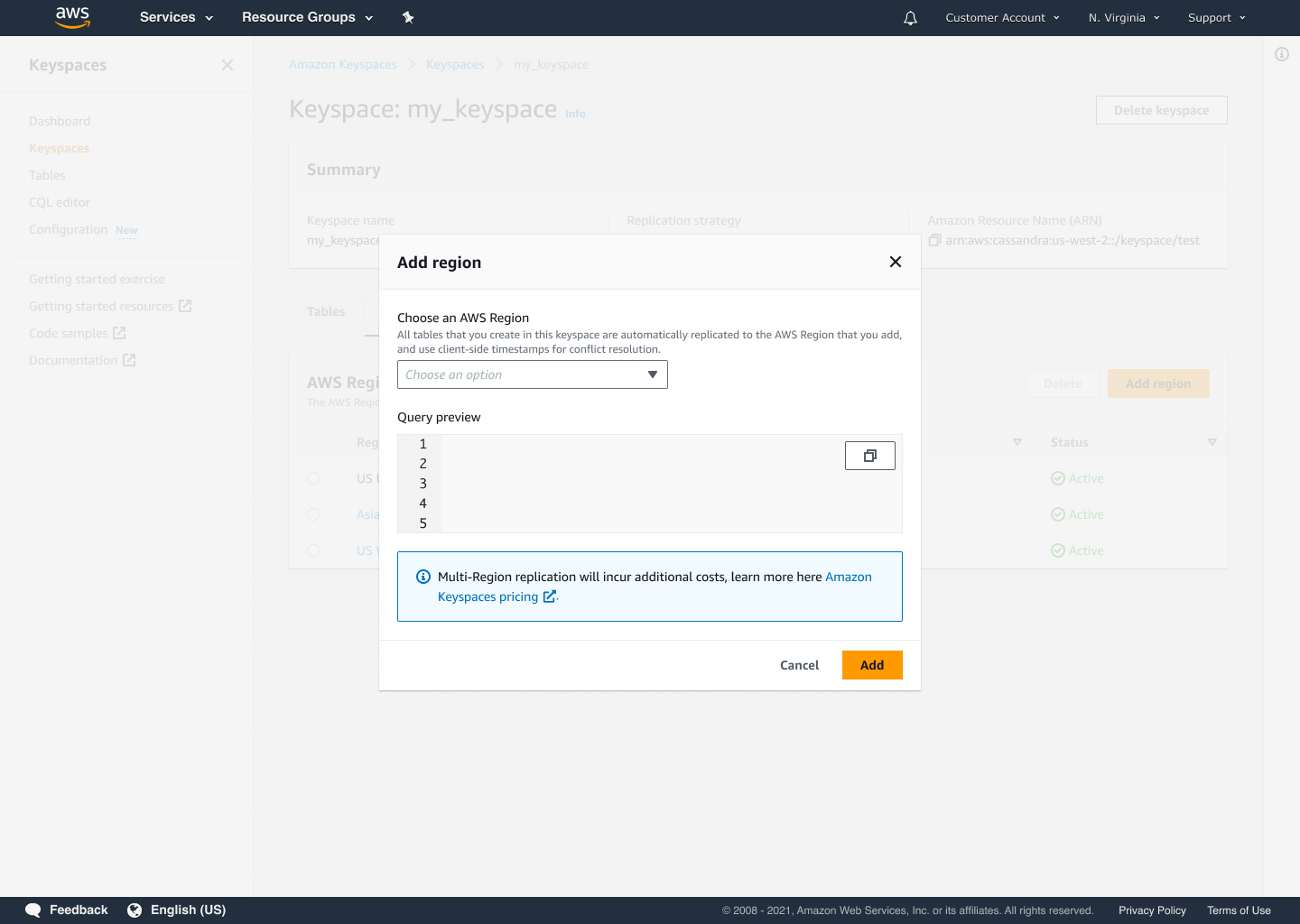

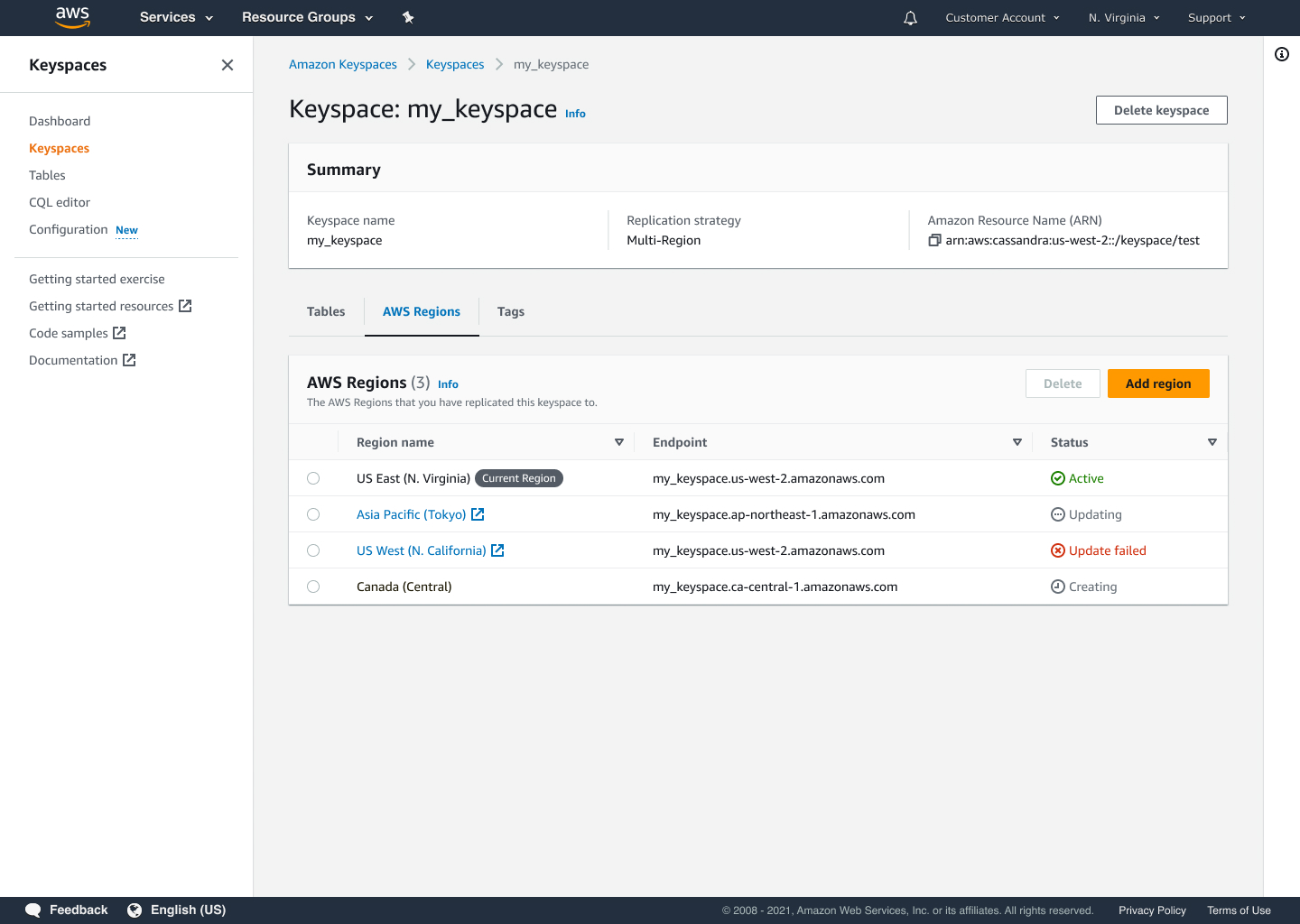

Phase 2: Management Features

- Dynamic region management for existing keyspaces (add/remove)

- Region-specific configuration controls

- Enhanced monitoring with data synchronization tracking

Challenges

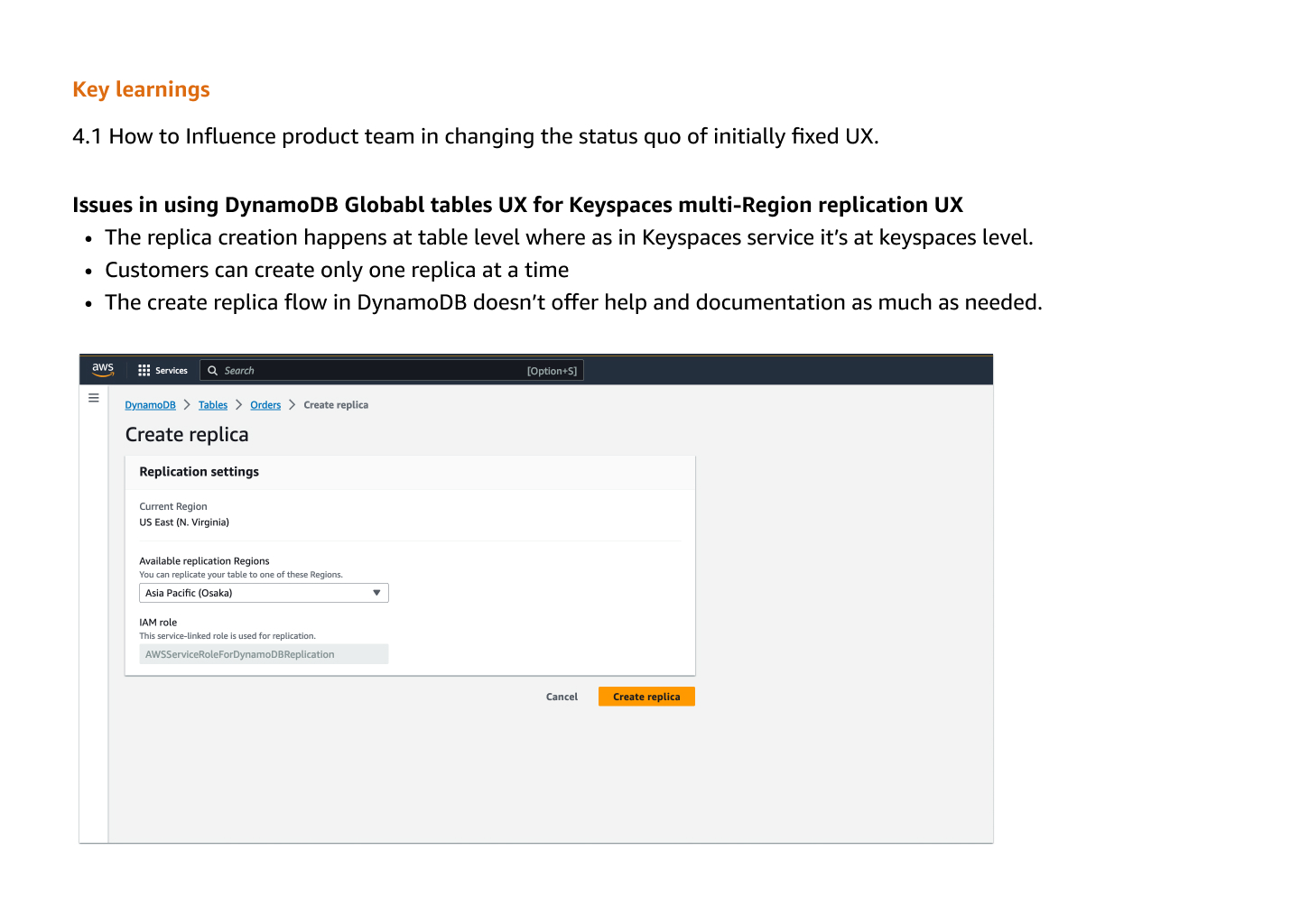

1. Breaking Status Quo UX Patterns

The initial proposal was to reuse DynamoDB's Global Tables UX pattern, but this presented several issues:

- Architectural Mismatch: DynamoDB handles replication at the table level, while Keyspaces manages it at the keyspace level

- UX Limitations: Global Tables allowed only one replica to be created at a time, which made setting up several regions at once cumbersome

- Documentation Gaps: Insufficient help content and guidance in the creation flow

- User Mental Model: DynamoDB's table-level replication and Keyspaces' keyspace-level (database-level) replication imply different conceptual models

Through user research and workflow analysis, we successfully advocated for a custom UX pattern that better matched Keyspaces' architecture and user needs.

2. Technical Integration Complexities

Working with the backend team on Phase 2 features revealed several challenges:

- API Limitations: Existing APIs weren't designed to provide region-level status information

- Service Architecture: Required significant backend changes to expose replication health metrics

- Cross-Team Coordination: Worked closely with the backend team to modify API contracts for status monitoring

Impact

Phase 1 Launch Results

The launch of multi-region replication removed a major adoption blocker for Cassandra customers:

- Adoption: 12.5% of customers now use multi-region keyspaces

- Usage: An average of 45 multi-region keyspaces created each month

- Migration: Enabled several large Cassandra customers to migrate to the managed service

- Operational: Eliminated the need for manual cross-region replication management